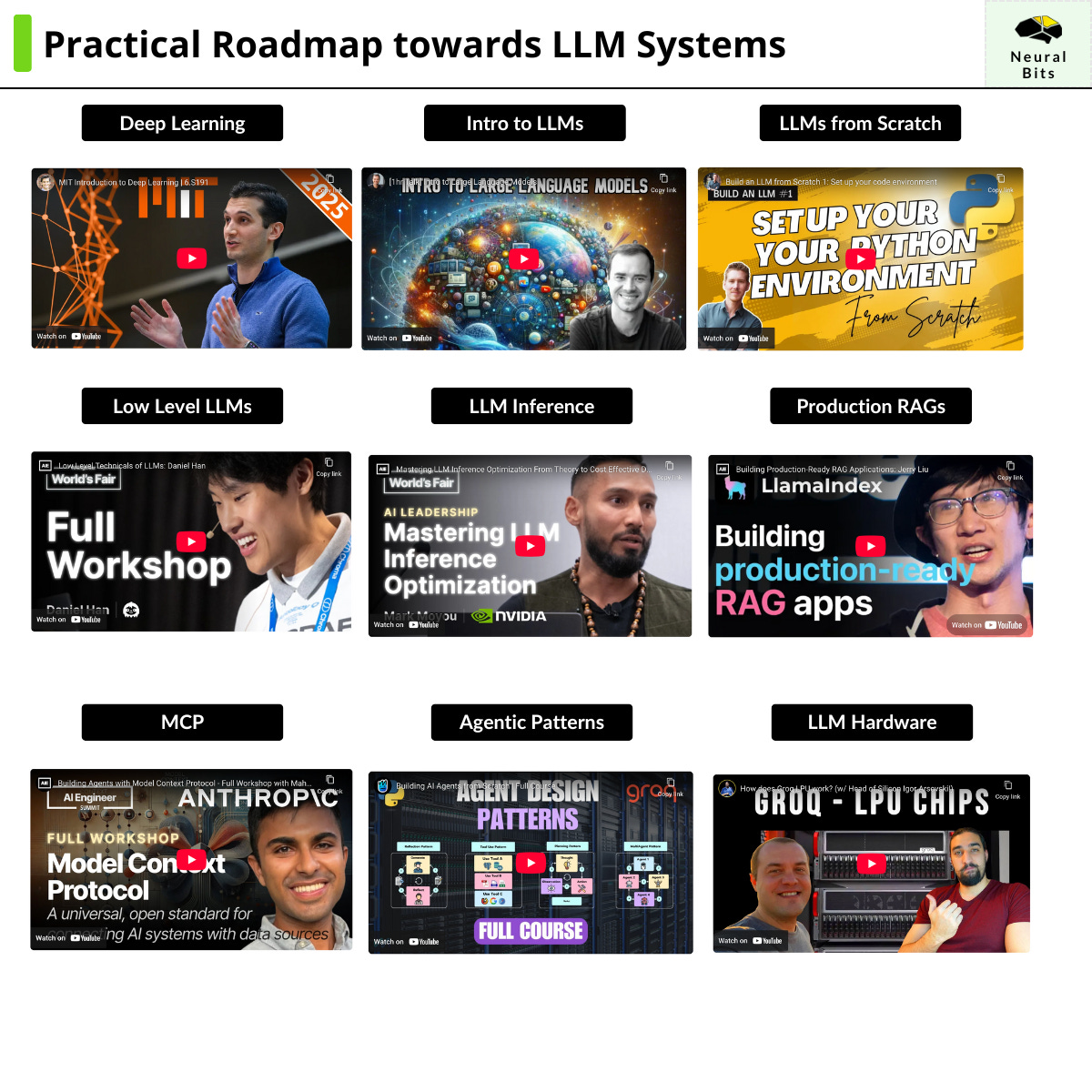

A practical roadmap on LLM Systems

11 hands-on free long videos on understanding advanced LLM Systems.

Won’t make this post too long.

I’ve grouped here a few resources for studying LLM-based systems that helped me upskill as an AI Engineer. Although I’ve been working with Deep Learning and have a good grasp of both the low-level technicalities and application integration, there are always a few golden nuggets I pick up when revisiting these resources.

There are many resources on the internet and many experts to learn from. What worked best for me was to focus on component-specific learning rather than a full end-to-end walkthrough. Thus, I’ve tried to sort these resources as a progression of components targeting different LLM Systems areas.

Enjoy!

Table of Contents

MIT Deep Learning 2025 Edition (Alexander Amini, Liquid AI)

Basics of LLMs (A.Karpathy, 3Blue1Brown)

Building LLMs from Scratch (A.Karpathy, S. Raschka)

Low-level technicalities of LLMs (Daniel Han, Unsloth)

Finetuning and Merging (Maxime Labonne, Liquid AI)

Production Ready RAG (Jerry Liu, LlamaIndex)

Agentic RAG (Jerry Liu)

LLM Inference Optimization (Mark Moyou, NVIDIA)

MCP Full Workshop (Anthropic)

Agentic Patterns (Miguel Otero Pedrido)

Extra: Groq LPU for LLM Workloads (Igor, Groq)

1. Deep Learning

Sometimes it feels like the LLM & GenAI field is sold as something separate from traditional ML or Deep Learning, which is false. A good rule of thumb: if a concept or field brings up terms such as “neural network”, “pretraining”, or “model architecture”, it’s Deep Learning. This free course from MIT is the updated 2025 edition of their popular “Introduction to Deep Learning”, and I recommend you start with it.

2. Basics of LLMs

I’ve read multiple articles, completed books, and attended workshops on the LLM & GenAI basics, but by far the best resources I keep going back to are these two.

Andrej Karpathy (1.5h)

3Blue1Brown (30min)

3. Building LLMs from Scratch

To nail down the concepts behind LLMs, including tokens, the transformer architecture, layers, and the attention mechanism, it’s best to have someone guide you through coding them.

Andrej’s GPT from scratch series is great. Plus, he’s a great educator and knows how to explain concepts with enough detail.

Sebastian Raschka’s Build an LLM from Scratch is also great for a hands-on walkthrough on building an LLM. It pairs well with his book, which I recommend.

4. Low-level technicalities of LLMs

Daniel and his brother Michael are behind Unsloth, which is a very popular library that allows everyone to fine-tune (i.e., adapt) LLMs on consumer hardware, reducing the memory and compute footprint the fine-tuning process takes.

Daniel here covers multiple low-level details on LLMs, including how normalization and activation functions affect LLM responses, the math behind LLMs, and more.

Andrej here covers Tokenizers and Tokenization, which are core components of any LLM System. Tokenizers are the pre- and post-processors that transform natural language text into token IDs an LLM can consume, and then convert the LLM’s output tokens back into natural language.
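To make the encode/decode round trip concrete, here is a minimal sketch. It assumes a toy character-level vocabulary, and the `CharTokenizer` class is a hypothetical name for illustration; production tokenizers typically use learned subword schemes such as BPE instead.

```python
class CharTokenizer:
    """Toy character-level tokenizer (illustrative assumption, not a real LLM tokenizer)."""

    def __init__(self, corpus: str):
        # The vocabulary is simply every unique character seen in the corpus.
        chars = sorted(set(corpus))
        self.stoi = {ch: i for i, ch in enumerate(chars)}  # string -> id
        self.itos = {i: ch for ch, i in self.stoi.items()}  # id -> string

    def encode(self, text: str) -> list[int]:
        # Text -> token IDs (what the model consumes).
        return [self.stoi[ch] for ch in text]

    def decode(self, ids: list[int]) -> str:
        # Token IDs -> text (what the user reads).
        return "".join(self.itos[i] for i in ids)


tokenizer = CharTokenizer("hello world")
ids = tokenizer.encode("hello")
text = tokenizer.decode(ids)
print(ids, text)
```

The round trip is lossless here only because every character of the input exists in the vocabulary; an unseen character would raise a `KeyError` in this sketch.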

5. Finetuning and Merging

LLMs are Foundation Models. These models were pretrained on a very large text corpus, which tuned the layer weights to capture context and semantics, making them capable of producing human-readable outputs. However, they are generalists in most use cases, and to adapt them to a specific field (e.g., medicine, math, programming) or task, they have to be fine-tuned on a set of samples representing that task or field.

Maxime unpacks the concept behind fine-tuning and also covers model merging, which refers to the process of taking learned “behaviours” of different fine-tuned models and averaging/merging them into a single model.
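As a rough illustration of the averaging idea, the sketch below merges two models by taking a weighted average of their parameters. It assumes both models share the same architecture, represents weights as plain Python lists, and uses a hypothetical `merge_models` helper; practical merging methods are usually more elaborate than a plain average.

```python
def merge_models(state_dicts, weights=None):
    """Linearly average parameters across models with identical parameter names and shapes."""
    if weights is None:
        # Default to a uniform average over all models.
        weights = [1.0 / len(state_dicts)] * len(state_dicts)
    if len(weights) != len(state_dicts):
        raise ValueError("need exactly one weight per model")

    merged = {}
    for name in state_dicts[0]:
        # Group the i-th value of this parameter across all models.
        columns = zip(*(sd[name] for sd in state_dicts))
        merged[name] = [sum(w * v for w, v in zip(weights, col)) for col in columns]
    return merged


# Made-up parameters standing in for two fine-tuned models.
math_model = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
code_model = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

merged = merge_models([math_model, code_model])
print(merged)
```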

6. Production Level RAGs

As a progression from fine-tuning, RAG (Retrieval-Augmented Generation) is a popular design behind a large majority of current LLM Systems. RAG refers to injecting additional context from a task-specific knowledge base into the LLM’s prompt, helping the system return more accurate responses and directly tackling the “hallucination” problem common to all LLMs.

Jerry Liu explains the concept of RAG from the ground up, providing expert insights on all components of a solid RAG System (e.g., Embeddings, Queries, Vector Similarity).
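The retrieve-then-inject flow can be sketched in a few lines. This is a minimal example under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model, the documents are made up, and `retrieve` and `build_prompt` are hypothetical helpers rather than any library’s API.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: bag-of-words term counts.
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    # Rank the knowledge base by similarity to the query and keep the top k.
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine_similarity(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # Inject the retrieved context into the prompt sent to the LLM.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


documents = [
    "The refund policy allows returns within 30 days",
    "Shipping takes five business days",
    "Support is available by email",
]
query = "what is the refund policy"
top = retrieve(query, documents, k=1)
prompt = build_prompt(query, top)
print(prompt)
```

In a real system, the embeddings would come from a trained model and the ranking from a vector store, but the shape of the pipeline stays the same.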

7. Agentic RAG

Traditional RAG Systems fetch relevant context from a fixed knowledge base. Agentic RAG brings AI Agents into the mix, letting an agent decide and carry out a course of action by itself. Instead of relying on a fixed knowledge base, agents can route and select which context to get from which source.

In this one, Jerry (same person as in #6) presents the integration of Agents in RAG Systems quite nicely, discussing tool calling, agent orchestration, agent reflection, and more.
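The routing idea, where an agent picks which source to query, could look roughly like the sketch below. Everything here is an assumption for illustration: the two sources are stubs, and `choose_source` uses keyword matching as a stand-in for the decision an LLM would make in a real agent.

```python
def search_internal_docs(query: str) -> str:
    # Stub for a lookup in a private knowledge base.
    return f"[internal docs] {query}"


def search_web(query: str) -> str:
    # Stub for a web search tool.
    return f"[web] {query}"


SOURCES = {"internal_docs": search_internal_docs, "web": search_web}


def choose_source(query: str) -> str:
    # Stand-in for the agent's decision step; a real agent would ask an LLM to pick.
    internal_keywords = {"policy", "handbook", "onboarding"}
    words = set(query.lower().split())
    return "internal_docs" if words & internal_keywords else "web"


def agentic_retrieve(query: str) -> tuple[str, str]:
    # Route the query to the chosen source and return the source name with its context.
    source = choose_source(query)
    return source, SOURCES[source](query)


source, context = agentic_retrieve("what is our vacation policy")
print(source, context)
```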

8. LLM Inference Optimization

This is a topic I love studying and reading about. One of the current problems with LLM Systems is scale and efficiency. Because of how they work internally, they require lots of GPU compute power. Commonly, there are two ways to look at this trade-off:

Larger models = better quality = more compute.

Smaller models = poorer quality = less compute.

If you’d like a quicker intro to why LLMs are challenging to parallelize, please see my previous article:

Mark Moyou covers here everything you need to know and understand about the challenges of building scalable LLM solutions, starting from hardware, how LLMs work, challenges of parallelizing LLM workloads, and building up to best practices when doing so. I strongly recommend it!
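To put a number on the size-versus-compute trade-off, here is a back-of-the-envelope estimate of the memory needed just to hold a model’s weights. The model sizes (7B and 70B parameters) and the 16-bit precision are assumptions picked for illustration, and the estimate ignores activations and the KV cache, which add to the total at inference time.

```python
def weight_memory_gb(num_parameters: float, bytes_per_parameter: int) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Illustrative sizes, stored in 16-bit precision (2 bytes per parameter).
small_fp16 = weight_memory_gb(7e9, 2)
large_fp16 = weight_memory_gb(70e9, 2)
print(small_fp16, large_fp16)
```

Under these assumptions, the larger model needs ten times the memory of the smaller one before a single token is generated, which is why serving large models usually means splitting them across several GPUs.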

9. MCP Full Workshop

MCP stands for Model Context Protocol, which is a new standard proposed by Anthropic that became popular once AI Agents came into play. Think of it as a standardized interface you build that exposes only specific endpoints for your agents. These endpoints are interpreted as tools and might be reading from a database, performing a Google Search, manipulating files, generating reports, or reviewing a merge request - the possibilities are many.

Mahesh describes in depth the concept of MCP, its Client-Server structure, how to build MCP Servers, and more. It’s packed with detail.
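The “expose only specific endpoints as tools” idea can be sketched without any library. To be clear, this is not the MCP SDK or its wire protocol: the `tool` decorator, `list_tools`, and `call_tool` are hypothetical names that only mimic the concept of a server advertising a fixed set of tools and executing calls on behalf of an agent.

```python
TOOLS = {}


def tool(fn):
    """Hypothetical decorator: register a function so agents can discover and call it."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def word_count(text: str) -> int:
    """Count the words in a piece of text."""
    return len(text.split())


@tool
def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


def delete_everything():
    """Not registered as a tool, so an agent has no way to invoke it."""
    raise RuntimeError("should never be reachable through call_tool")


def list_tools() -> list[dict]:
    # What a client would see when asking the server which tools exist.
    return [{"name": name, "description": fn.__doc__} for name, fn in TOOLS.items()]


def call_tool(name: str, arguments: dict):
    # Only registered tools can be executed; everything else is rejected.
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**arguments)


result = call_tool("word_count", {"text": "model context protocol"})
print(list_tools(), result)
```

The design choice worth noticing is the allow-list: the agent can only reach what the server explicitly registered, no matter what else lives in the codebase.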

On the same topic, here’s a quick bonus video with insights on building AI Agents I think you’ll like.

10. Agentic Patterns

Agentic Patterns bring structure to how we build LLM Systems that make use of agents.

Miguel Otero Pedrido unpacks here the 4 common agentic patterns, building each one from scratch in pure Python - a great hands-on tutorial!

11. Extra: Groq LPU for LLM Inference

This video is one year old, but it’s packed with low-level details that I still revisit periodically. In short, Groq is one of the few companies that decided to build dedicated hardware to tackle the bottlenecks LLM Inference has on existing GPUs.

Throughput for LLM Inference is commonly measured with the TPS metric (i.e., Tokens per second), and Groq is leading in this area.
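For reference, TPS is simple arithmetic: tokens generated divided by the time it took to generate them. The numbers below are made up for illustration and are not measurements of any hardware.

```python
def tokens_per_second(generated_tokens: int, elapsed_seconds: float) -> float:
    """Throughput of a generation run, in tokens per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return generated_tokens / elapsed_seconds


# Hypothetical run: 600 tokens generated in 2 seconds.
tps = tokens_per_second(generated_tokens=600, elapsed_seconds=2.0)
print(tps)
```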

Igor (Head of Silicon at Groq) presents the Groq LPU chip architecture, and goes low-level into hardware details such as transistors, cooling system, architecture design, and more.

Conclusion

In this article, I’ve grouped 11 long-form videos, some of them entire playlists, that revolve specifically around LLM Systems. The goal is to provide a progression of resources into advanced LLM concepts, and I think they’ll help you as much as they’ve helped me pick up new concepts and reinforce the ones I already knew.

On your next study session, I recommend watching any of these, as surely you’ll learn valuable information that’ll help you in your AI Engineering journey.

In case you missed it, I’m working on an advanced and practical course on AI Systems where I’ll guide you through building each component and unpacking each concept.

I’ve opened a poll to get audience feedback that will help me refine and better adapt it. Please vote here:

Thanks, see you next week!
